A 2019 Statista survey of nearly 2,000 US voters found that only 72% agreed that vaccines should be mandated for children. A very similar percentage was announced in December 2020 in a KFF COVID-19 Vaccine Monitor survey showing that 71% would “definitely or probably get a vaccine for COVID-19 if it was determined to be safe by scientists and available for free to everyone who wanted it.” But a scan of more recent online reports in 2021 quickly shows COVID-19 vaccine hesitancy ranging from 20% to nearly 50%. In the face of overwhelmingly unified media statements that the scientific community unequivocally backs vaccine safety, what explains the substantial groups that remain naysayers? Some, but not all, are minorities still stung by histories of having been abused, neglected or both by medical communities. Many are front-line workers in nursing homes and hospitals. The most recent poll, from early March 2021, found that more than 40% of Republicans were not planning on being vaccinated against COVID-19.

One standard answer: anti-vaxxer irrationality backed by shoddy research spread through social media by irresponsible, rogue scientists and others wishing to profit from creating public doubt. But that only raises the question again: why are wacky conspiracy theories and alternative-universe pseudoscience seducing people away from sane scientific certainty? Why are so many making or considering choices that threaten to block entrance to the promised land of herd immunity for the entire nation?

Our vaunted objective science, the one that has brought us countless marvels in medicine, industry and technology, has roots in Sir Francis Bacon’s (1561-1626) efforts to clean up the “quest for knowledge.” His solution to the vulnerability of human thinking to error was to expunge unreliable elements. He rejected anything derived from past authorities (e.g., Galen, Aristotle), superstitions, and personal preferences, while voicing confidence only in that which can be measured and counted. John Locke (1632-1704), soon after, differentiated between an object’s subjective qualities, those dependent on the senses of the person perceiving them (color, smell, taste and sound), and objective ones inherent to the object, itself (solidity, extension, motion, number and shape). Only the objective ones qualify for scientific inquiry.

The results were impressive, and the venture progressed rapidly along a path toward portraying all phenomena as interactions of particles and electro-chemical forces. It established a paradigm that depicted the human body as a very complicated machine. The last traces of Aristotle’s assertion that a vital principle differentiates the living from the non-living persisted late into the 19th century before falling under the wheels of the juggernaut.

Watson and Crick’s Nobel Prize-winning identification of the DNA double helix at the core of the living cell can be seen as an endpoint to this project. The genetic code in that spiralling DNA was billed as the master controller of biologic form and functioning. But Francis Crick didn’t stop there, and he took, as his next grand project, the investigation of consciousness. His research led him to proclaim (condensed here): “You, your joys and sorrows…[and] your sense of personal identity are in fact no more than… a pack of neurons.” He was, in essence, declaring “the self” to be nothing more than an epiphenomenon, a shadowy sideshow that appears on stage but does not really cause anything. The neurons, biological compound molecules, and their electro-chemical charges are what’s real, and their configurations are the result of nature’s random, natural selection processes. Crick’s line of thought was carried further by the prominent German neurophysiologist Wolf Singer (b. 1943), who declared emphatically that we humans should avoid talking about the virtues of personalities because “…We are determined by circuits” and should therefore rather celebrate “…cognitive achievements of human brains.” This Crick-Singer line of thought, one that has been taken up widely in the scientific community, says that matter and energy are everything.

Around 1610, when that line of thought was first finding its legs, older lines were still quite strong for some, and poet (and later Anglican priest) John Donne described reason as “God’s viceroy in us.” Reason and faith had not yet fully gone their divergent ways. It may seem ironic that what started out as an effort to make thinking more rigorous and true in the service of science, by casting out unreliable human tendencies, ended up trivializing the knowledge quest itself by calling it nothing more than an electro-chemical process impelled by survival of the fittest à la Darwin.

So, the entire universe is just atomic particles and energy. But what if it isn’t? What if throwing ourselves out the door is a sacrifice too far? In the Old Testament story, when Abraham, at God’s command, is about to sacrifice his son Isaac, he is stopped by the angel of the Lord calling out his name, and a ram is sacrificed in Isaac’s place. But who or what will stop this expulsion of our very selves from the prevailing conception of the universe, which is, after all, our home?

Here comes my favorite drug to the rescue, after a brief word about the one thing that neither Bacon nor Locke thought of excluding from the scientific pursuit of knowledge: cash. Everyone knows that it takes money to do science, and everyone knows about the corrupting influence of the promise of profit. In the 16th and 17th centuries, however, that hadn’t surfaced as an issue. In our time, on the medical side of science, we have the NIH, the CDC and the FDA, all of which are charged with monitoring and protecting the population’s health, and with keeping the data needed for addressing health challenges free of corrupting influences. No small feat, given that a huge percentage of the clinical data used for evaluating drugs and medical devices comes from a pharmaceutical industry led by CEOs who are, in turn, governed by a legally enshrined obligation to satisfy shareholders first and foremost.

Beyond whatever vigilance these federal agencies may maintain, there is an organization that for the last 28 years has taken upon itself the task of rating the scientific validity of the clinical trials that guide drug approvals and healthcare advances. Originally known as the Cochrane Collaboration (now just “Cochrane”), it is a non-profit corporation that describes itself on its website as “…internationally recognized as the benchmark for high-quality information about the effectiveness of health care.” Its mission is “to promote evidence-informed health decision-making by producing high-quality, relevant, accessible systematic reviews...”

Are their systematic reviews inoculated against the corrupting influence of cash? The Cochrane policy on conflicts of interest, spelled out clearly in about three thousand words on their website, surprised me. When I was doing background research for a piece on vaccine safety, I looked into the list of reviewers on a journal article (Drolet, et al., The Lancet 2019) assessing overall HPV (human papillomavirus) vaccine efficacy in clinical trials. I did a quick count of the number of reviewers who had, as required, disclosed their potential conflicts of interest. Out of forty-six reviewers, twenty-three, exactly half, had declared conflicts of one kind or another. That led me to look into the Cochrane policy on review authors, which I found to state: “there must be a majority of non-conflicted authors for any particular review.” Pretty close. More recently, after I described this policy to a nephew who was trying to puzzle out mRNA vaccine safety for himself and his family, he did his own online research. He informed me of a recent change in Cochrane policy as of 2020, stated as follows: “The proportion of conflict free authors in a team will increase from a simple majority to a proportion of 66% or more.” So only a third may be conflicted? How important is that 34% with conflicts of interest? Most of the conflicts are on the inconsequential side, trivial in the grand scheme of things. After all, to be invited as a reviewer of a particular clinical trial, you have to be a highly trained clinician or researcher with some expertise in the relevant field, no? Not some amateur easily swayed by subconscious motives.

And with subconscious motives, now we’re really on the trail of my favorite drug—which necessitates a bit more history, this time on the placebo effect.

The initial work on placebos (pills containing only sugar or the like, or fake procedures) reflects a degree of embarrassment at the phenomenon. The expression “It’s all in your head” captures the mood. Why? Because placebo effects were seen as implying mental weakness and suggestibility. Placebos were described in a Journal of the Medical Association article back in the mid-1950s as useful tools for “harassed doctors dealing with the neurotic patient.” Not only were placebo effects found to be common in research experiments, they were strong, often in the 30% range—making it difficult for “real” drugs being compared to them to look good. Recent research at Harvard, one of the hubs of placebo research, showed that when patients were given a placebo and told that they were given a placebo with no real therapeutic value, they still reported feeling better than those getting nothing at all!

Only gradually, with the appearance of natural medicine and natural food movements, has the term “mind-body” overtaken dismissive characterizations. Placebo effects are now a respectable field of research. Their vast implications, however, which encompass everything from the physician’s tone of voice to the wallpaper in her/his office, are insufficiently taken into account. At the multibillion-dollar banquets of clinical research and clinical practice, placebo effects still sit at the kids’ table.

The reason is clear: our scientific/medical model is a “reductionist” one. Crick’s “you’re only a pack of neurons,” along with the dissing of placebo effects, were both firmly in tune with that model. That model’s suggestion of strict genetic determinism hasn’t held up as well as expected. The Human Genome Project found 30,000 human genes, barely more than the 26,000 in worms, and paltry compared to the 39,000 in water fleas and 50,000 in rice. The notion of a typewriter-like conveying of instructions for assembling proteins upward from the DNA in the cell nucleus has been supplanted by a growing recognition of epigenetic factors (epigenetics: the study of the interplay between environment, behavior and gene expression) coming to the fore and revealing numerous complex interrelationships within the minute space of the cell nucleus. To the question “If genes aren’t the master controllers at the core of the cell, what the hell are they?” responses have included a range of metaphors from “database” to “tool shed” to “pantry” to “seeds,” with the processes and their interactions depicted sometimes as “a dance.” You would be fully justified to wonder, “Who or what is doing all of this?”

So how would a new model look?

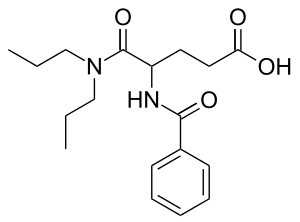

But first, as promised, our favorite drug: proglumide. Proglumide is an old drug first approved by the FDA around forty years ago. It still has a role alongside opioid analgesics (painkillers), and it has an extremely distinctive feature: it only works if you know you’ve gotten it. Let’s say you’ve undergone a surgical procedure and you’re in the recovery room with an intravenous (IV) line hooked up to a bag on a pole you can’t see. You rate your severe post-surgical pain as high as “8” out of a possible “10.” What would your likely pain rating be if the nurse secretly adds a bag of saline solution (a placebo) to your IV line? Or a bag with proglumide? The surprising answer: the same 8 for both. Just as you’d expect with an unannounced placebo, the secretly given drug also does zilch. What if the nurse says “I’m giving you pain medicine”? You’re likely then to rate your pain at about 6 with placebo (a reduction of 25 to 30% or so) and to report a much greater reduction, let’s say to 2, with proglumide. What’s going on? Morphine works whether you know you’ve been given it or not, and placebo effects have nothing to do with the substance you’re given. Proglumide, though, is a drug with specific effects occurring through what are termed “expectation pathways” in the brain. These are chemical effects on pain receptors that have to be stimulated by an event in consciousness, with understanding. Someone tells you, you hear it and get what the words mean. That’s the really important, unique feature.

That understanding to Crick, theorizing out of the old model, was a nothing, an epiphenomenon, an insignificant brain fart—a byproduct of the functioning of the organism that’s noticeable but hardly noteworthy. Crick, I’d bet, didn’t know about proglumide.

There’s more. Einstein, too, was struck by this same understanding thingamabob. He wrote in Physics and Reality, “The eternal mystery of the world is its comprehensibility.” His model, too, was one of matter and forces. And that led him to very serious indigestion when other pioneering physicists, like Niels Bohr, brought another element into explanations for the mystifying features of quantum mechanics, which was upsetting what we can call the classical Newtonian “billiard ball physics” of predictable interactions between matter and energy.

What was so unsettling? The discovery that light can manifest as both wave and particle, that in the atom, energy is distributed in a non-continuous “quantized” manner, and that in the quantum world, strange-seeming phenomena appear both theoretically and in actual, repeatedly verified observations. For example, with quantum nonlocality and entanglement, objects seem instantaneously to know about each other’s state, even when separated by large distances. Widely separated entangled photons, while rushing away from each other at light’s stupendous speed, remain connected so that a nudge to one is instantaneously mirrored by a change in the other. And there’s Schrödinger’s theoretical cat in a box, both dead and alive until someone checks in on it, embodying the radical notion that in the subatomic world, things may exist in many potential states at once. They only precipitate out as a specific one when they are measured. This notion, articulated by Bohr, horrified Einstein (“You’re telling me that if I’m not looking at the moon, it isn’t there?”).

In many widely held interpretations of quantum reality, this is still central—the notion that conscious measurement locks the options of potential reality down to the specific world we experience. Measurement requires an understanding consciousness—a someone with an ability to hold the ruler and take a look and notice. A scientist, for example.

So, when a tree falls in the forest, is there a sound…? The old conundrum. True, there are processes that unfold in the forest without consciousness. But then (and here we ask Dr. Einstein to take a seat and listen), then there’s no there there. Animals surely have awareness and experiences. But when you point to something with your finger, your dog looks at your finger, not where you’re pointing. For there to be a “there,” you need a here with a someone with a particular kind of consciousness, one that recognizes concepts as concepts. Homo sapiens: wise human or rational human or thinking human. Yes, higher animals exhibit all kinds of marvelous understandings, but they don’t establish findings and publish them, they don’t hand out certificates, they don’t require and issue licenses (or give out parking tickets).

And here we are, stuck with a science that, like a plane nosediving into the ocean, has been hell-bent on exterminating the basis for its own existence: the understanding capacity of the scientist, and the understanding itself. The fool’s errand of claiming that understanding is a mechanical process was a great gift, because it exposes the fallacy. Mechanical process? Ask any parent. Ask any teacher. The reality of experience defies “billiard ball” physics. You can’t explain understanding without using it; you can’t explain experience without using it. These are primary. They are not made of parts. You need certain parts to have them (a body, a brain, a mind), but understanding and experience themselves aren’t made of those parts. Abstract theories to the contrary dissolve in the fluid heat of honest thinking.

So, if we stop fighting this “right in front of your nose” fact, is that the end of science? Are we condemned to Ouija boards and to reading the entrails of sacrificed animals to look for truth and make predictions? No. But science has to open its borders. It has to, in a sense, grow up.

Grow up? Yes. An analogous history can be traced in biology. After getting rid of the vital principle, the reductionists’ next target was purpose. Soon, Darwin’s random natural selection picture of evolution became entrenched and mandatory, and attributing purpose other than survival to beings or things in nature became a strictly enforced taboo. Those who strayed invited scathing accusations of anthropomorphizing, of projecting human subjective traits onto objective phenomena. Wouldn’t a bright red cardinal have better survival odds with a camo pattern on its feathers? No, because then the less flamboyant female wouldn’t be attracted to his blazing redness, and then he would perish without passing on his genes, or some such formulation. We have long been subjected to the contortions of biologists mashing and shoehorning all their observations toward the same dreary task: explain how this feature or that serves survival. Well, one could dryly reply, in exasperation, that a stone perched out in infinite space survives just fine. Why bother with all this blooming diversity?

A different idea is, shall we say with hope, ascendant. Open systems biology focuses on the interrelationships of organisms within their environment. Some open systems biologists are not hidebound to a Lego science of parts and causation from below. They look at, well, systems, and recognize a hierarchy of them in living organisms. You can pass across levels upward or downward, from atoms to molecules to macromolecules to organelles to cells to organs to organ systems and then to organisms. Interrelationships make systems more than the sum of their parts at each level. Also, as you ascend levels, you see that entirely new primary phenomena emerge, phenomena that are not in the least predictable from the assortment of parts in the level below. At the atomic level of elements, where you have hydrogen and oxygen as parts, there’s no predicting water as a result of their combination. The parts, the two gases, disappear when the new phenomenon of water emerges. No one takes a sip and says, “this water is a little light on hydrogen.” At the moment you die, your body descends from a level where laws pertaining to life processes and rhythms (respiration, circulation, digestion, reproduction, etc.) are operant and prevail, to one where mineral laws of chemical accretion and decomposition prevail. Each level has its own features and laws, and while there are interactions within and between levels, the higher level organizes the lower, ordering the interactions of the parts that it needs. Pioneering cell biologist Paul A. Weiss, PhD (d. 1989), a recipient of the National Medal of Science who established principles of cellular self-organization, declared that in a biological system “the structure of the whole coordinates the play of the parts; in the machine the operation of the parts determine the outcome.” But even if we look at our automobiles with their roughly 30,000 parts, we see that while the parts make up organ-like subsystems (the engine, the drive train, steering, braking, fueling, cooling, seating and so on), the principle that unites those subsystems and makes a whole out of them is the car concept itself, and its utility. Yes, this sounds like a return to Aristotle’s formal cause.

When Dr. Einstein complained about the moon’s needing his consciousness to be there, he was speaking out of an ordinary picturing of the moon out in the space of a cosmos populated only by stuff and energy. But where was his genius? Not the primal relationships (E=mc²), but his gift for grasping and communicating them? It was, for certain, nowhere to be found in the equation. That’s the glory of our ability to think abstractly. Through it, we fulfill the Bacon/Locke aim of removing ourselves to achieve a purified and strengthened thinking. But in doing so we forget that it is we who are doing so, doing the thinking. It’s the baby with the bathwater thing. And that’s how we ended up being surprised by proglumide and by its clear message, and by the guy in a white coat who wants to celebrate “the achievements of human brains.” While understanding has correlates in the brain that light up on PET scans, and what’s going on in the brain can impact the body, the experience of understanding is at least as much a part of the world as the neurons, and has enormously greater power to affect the outer world. We noticed proglumide’s little quirk and wrote it down in Wikipedia, but, lost in our fascination with our multi-part cars and computers, drove right past its broad implication: that human consciousness and the drive toward understanding are fundamental to the world’s and our own evolving.

Our arrival at the evolutionary stage where we gained the ability to think abstractly, to step outside the world for a moment and concoct theories, is a morally fraught one. It may be no coincidence that in the same era that we have begun to unleash the power of the atom, we are also gaining glimpses into the role that human relationships play in health and illness. That as we are just starting to unravel the complex codes of biological life, we’re also confronted with the terrible cost to the earth and our human communities of uncontrolled technologies and short-sighted, short-term economic gain. Vandana Shiva, Indian physicist, multi-activist, feminist and vocal opponent of commercializing water and patenting of seeds, did her PhD thesis on quantum theory, on non-separability and on non-locality. She explained in a video interview (https://www.youtube.com/watch?v=fG17oEsQiEw) with Bill Moyers: [condensed/paraphrased] “That basically means that everything is connected. The industrial revolution and the scientific revolution gave us a very mechanistic idea of the universe. First we are told that nature is dead…that everything is this hard matter. [That idea]…is still guiding a lot of science. Genetic engineering is based on that hard matter, genes in isolation, genes determining everything. There’s a master molecule that gives orders–old patriarchal stuff [Moyers laughs]. The real science is the science of interconnectedness, of non-separation, that everything is related.” When Moyers challenged her saying that economic globalization is said to offer high levels of interconnectedness, she replied, “This is not interconnectedness at an ecological level. It’s extremely artificial corporate rule on a planetary scale.”

I was impressed that, like me, Dr. Shiva connects the dots, linking quantum phenomena to a needed revolution in thinking. She went on: “All that’s flowing around is commodities that don’t really have to be moving. You load the ships from China going here with cheap consumer products for Walmart made by slave labor. The transformation of the earth into commodities that flow leads to disconnection. This interconnectedness of the world through greed excludes people, kills their humanity, and leads to human divisions and a rise in conflict of every kind. What we see is a drop in the sense of common humanity and global consciousness. It’s still the old billiard ball model of separate particles.”

And there are more dots to connect—tying in our favorite drug that needs human understanding to be activated, and the systems biology of layered levels of laws, separate and interconnected, with the whole playing the tune so the parts can dance together.

Let’s detour back to COVID-19 and those so-called vaccine-hesitant anti-science types. There’s a natural conflict underlying the dilemma they pose. It’s the conflict between the notion that public health messaging must be simple and consistent to be effective and the principle that the bedrock of real government credibility and public trust is open public debate. Have you noticed the complete lack of public debate on the COVID-19 vaccines? Virologists who can speak ordinary language weighing the issues out in the open? Experts debating mRNA vaccine safety, frequency of side effects, rates of mortality and morbidity, and testing and reporting reliability, with fact checkers on hand? Believe me, they’re out there from all sides, dripping with MDs, PhDs, and MPHs. But the major media outlets and the powers behind them have made a firm decision, whether tacit and uncoordinated or outright conspiratorial, that “speaking openly equals death.” And that justifies brutally assaulting the messengers before they can even open their mouths. You end up having warring cults, with reason a mere slave to ideology. Don’t you know that the hordes charging up the Capitol steps thought they were saving the nation? How that detachment from fact occurred is the deeper challenge for the nation’s healers at all levels. More people than you might think, whose lives and livelihoods have brought them into proximity with the wielders of outsized power, worry that despite the nice talking heads, objectivity in the media has been drained away. More people than you might think, suspecting that the airwaves are owned by people selling a story, wish they had a place to turn to for unvarnished truth. The thought may well arise: theoretically, it’s possible that peak profitability and true compassion are flowing out of the same spigot, but it’s also possible that, when you follow the money, you’ll see that it’s insider trading on a massive scale, with saving the world as the sideshow. Whether those with the most control are deceivers or simply deluded hardly matters.

The dictum is: “Never waste a crisis.” Who will this one end up serving? The messages here tear at the old firewalls between inner and outer worlds. In the public arena, the doors between fact and fiction have been blown off their hinges, while the pandemic still rages and the death toll mounts. Winning back shattered trust is a hard journey, but it’s the good journey. Forget the old normal. We need a new one recentered on the kind of healthy understanding that replaces despoilers and opportunists with citizens and guardians.

The question sits out there with startling clarity: Does achieving herd immunity more quickly justify enforcing herd mentality? Recent events and knowing the mindset that got us here say no.

March 2021